Preface

I’ve been looking to start a homelab ever since I started building a router for my home network, and I’ve recently started pulling together the bits and pieces I need to run one successfully. The first part of this process is choosing what I want to run everything on, and there are a lot of options, to say the least. This article is more of a knowledge repository for some of the things I kept in mind while meandering through this process. Additionally, it needs to be said that I’m not an expert in any of this and generally haven’t had any of these parts in my hands to test for myself, although I do find it interesting to look into some of the devices and hardware I don’t get to work with at my day job.

When looking at building a homelab, you tend to be inundated with 100 different choices for every part of the system. While there are usually better and worse choices, most of them have drawbacks in other areas, so the idea is to pick “what works best in my situation”. The things I’m going to discuss here are important to me, and it’s quite possible you don’t care about them, but it’s probably useful information to keep in mind, as researching this stuff is an involved process with deep dives into some of the more complex sides of hardware choices.

Some of this should be fairly self-explanatory, as I’m trying to explain from the basics. Again, I’m not an expert on any of this; this is just what popped out while I was researching.

Questions to ask

Before buying anything, there are several questions I should have asked myself before getting started. These aren’t exhaustive, but are indicative of what could have saved me some research time.

What’s my budget?

This is one of the first things you could be asking yourself. What you’re doing is building a server, and these can cost anywhere from “free”, when using an old computer, to hundreds of thousands of dollars, so picking a general budget and figuring out what you can get for that money is a sensible first step. This seems extremely obvious, but it’s so easy to find that you need another part, spend the money and suddenly realize you’ve spent triple your budget on parts that you didn’t need, but wanted. Additionally, it might be a good idea to spend under your max as there’s always something you miss when making the initial purchase.

What do I want to do?

There are a huge number of different servers that could be built, but generally we’re talking about a NAS to serve files and an application/web server to host applications. This choice can be important because it affects the base software running on the machine, which I’m not discussing in this article. While both can run on the same machine, it does need to be said there are some pitfalls in doing this. For myself, while I’d like to run separate servers for files and applications, my budget only stretches to one of the 2 servers. However, I’ve also made the decision to keep my storage drives separate from the running of my containers. This generally means using network shares, which has some performance implications, but I think in my case it should be ok.

Where are my devices going to be located?

This will be discussed in more depth later, but servers can be extremely noisy. This probably doesn’t matter if the server is going in the basement or a garage, but if it’s going somewhere you’ll be in pretty often, this could be a primary consideration. For example, my own server is going in the living room, so it’s fairly important, but not quite as critical as it would be if it was in my office.

Where can I find the parts I need?

There are the standard IT retailers if you’re looking for new gear, such as SCAN in the UK or Amazon. If you’re looking for second hand, eBay is usually a great resource, but it can be a little difficult to search for specific home lab hardware, so sites like LabGopher can help. There are also more specific retailers like Bargain Hardware in the UK.

Do I have something old I can use to get started?

Actually working with something that isn’t particularly important was really helpful for figuring out if my choices were valid for my situation, even if the performance wasn’t up to what I wanted for the real system. For myself, I used my current desktop to check the software I was planning to run. This was a Windows machine, but you can get most things running via WSL or other options. Additionally, I had an old desktop lying around that I could use for testing networking and the bare metal Linux OS I wanted to use.

General Options

This section covers some of the options I found when I was looking for more information on various hardware choices.

Commercial vs Enterprise

I’m mainly viewing commercial vs enterprise to mean desktop chips vs server/workstation chips as these tend to be the markets they focus on.

Within the server space, there’s a much more expansive list of parts that can be used, and one of the easier ways to discuss the expanded parts list is to compare commercial products with products sold to enterprises, which usually have very different requirements, expanded feature sets and large price premiums. Within this, the focus tends to be placed on the CPU manufacturer, i.e. the most common way to base a system is on an AMD or Intel platform. Figuring out whether it’s commercial is fairly easy as it’s most often distinguished by the processor family, such as AMD “Ryzen” being commercial and “Epyc” being server models. Similarly, Intel uses “Core” for its commercial products and “Xeon” for its server chips. There are a lot of other families too; for example, my router uses a Celeron processor from Intel. It should be noted that there’s a lot of overlap between the feature sets and either can be used in a server build.

When looking at enterprise parts, it can be a little difficult to figure out what they do as the most information you get off listings is a part number, but I’ve found just googling said part number brings up a lot more information about what it does and how it differs from some of the other choices you could pick.

While it’s possible to get hold of individual parts for a server (such as motherboard and CPU combos), it’s far more common to see full systems built by companies like HPE, Dell and Supermicro, among others.

One thing that might be worth mentioning is that servers are optimized to run 24/7, 365 days a year, but how much that’s worth in a homelab, I’m not sure.

Used or New

If you’d like to build a server using enterprise parts, it’s highly likely you’ll be looking at used parts over new, as they’re so much more expensive to buy new. These generally still work just fine, and companies regularly dump old server hardware onto the second hand market when it starts to leave support. Currently, it feels like the “most common” full systems to find involve Xeon E5 v4 chips, which date from 2016. Another common option is a “TinyMiniMicro”, a name that essentially combines the brand names of some of the mini PCs that offices used to run their business, due to the smaller size of the systems.

There are also “hybrid” deals offered by brands such as Machinist, which sells new X99 motherboards with used Xeon chips.

Compatibility in enterprise systems

While “right to repair” is being talked about a lot in the consumer space, this isn’t really a thing in enterprise. These devices are usually bought with support contracts in place to reduce risk, and verifying that hardware works together can be a matter of certification. As such, you can find issues where parts just won’t work together because of how the manufacturer has built the device. This can go up to and including proprietary cables and mounts within the server itself, which can stop you from doing things like replacing fans or running certain PCIe devices, or a server ramping its fans up to max when you don’t use a “genuine” hard drive. Usually, there are lots of discussions on community forums about what will and won’t work in your server, as well as workarounds, but it’s generally a good idea to check before you buy.

Case form factor

Unlike desktops, servers generally come in either a rack-mount or tower format. This does have some implications in other areas of the system. Generally, rack-mount servers are more space efficient as you can stack equipment vertically, but if you’re only planning to run 1 or 2 servers, the additional space required to house a server rack may not be worth it. There are also blades, but these are pretty specialized and I didn’t want to start my home lab with one. Tower servers, by contrast, are like traditional desktop towers. You can also get smaller ITX sized towers, but these tend to have less horsepower than the larger towers and servers. Additionally, some manufacturers like HPE offer rack-mount kits for their tower servers. If you’d like to rack mount commercial hardware, there are rack-mount cases available, but these can be thinner than standard tower CPU coolers are tall, so this should be checked.

Racks

There’s some terminology related to racks that I think is important to understand. Server racks are used for many different applications such as networking, audio and servers, and they all generally have the same width of 19” with varying heights and depths. Not checking the length of the server you want to rack mount is a common pitfall, as networking cabinets can have a depth of 500mm, whereas servers can be longer than 1m in some cases. Finally, racks are measured by how much stuff you can stack into them using “rack units” (also known as “U”), i.e. a 24U server rack can fit 24 1U rack mounted components. A standard full size server rack is 42U to 48U, while if you wanted to fit one under a desk you’d probably be looking at around 15U - 17U. You can use a rack unit calculator to get an idea of how tall the rack is going to be.
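As a rough sketch of what a rack unit calculator does, the snippet below converts rack units into mounting height and checks a server’s depth against a cabinet. The 44.45mm (1.75”) per U figure is the standard rack unit; the function names and the example depths are my own illustration, and the height is only the mounting space, not the outer frame, feet or castors.

```python
# One rack unit (1U) is 1.75 inches, which is 44.45 mm of mounting height.
MM_PER_RACK_UNIT = 44.45


def rack_height_mm(units: int) -> float:
    """Return the internal mounting height of a rack with the given number of U."""
    return units * MM_PER_RACK_UNIT


def fits_in_rack(server_depth_mm: float, rack_depth_mm: float) -> bool:
    """Return True if the server is no deeper than the rack (ignores cabling space)."""
    return server_depth_mm <= rack_depth_mm


if __name__ == "__main__":
    for units in (15, 17, 24):
        print(f"{units}U is about {rack_height_mm(units):.0f} mm of mounting height")
    # Hypothetical example: a 700 mm deep server in a 500 mm networking cabinet.
    print("Fits:", fits_in_rack(server_depth_mm=700, rack_depth_mm=500))
```

In practice you’d want to leave extra depth for cables and power plugs at the back, which this check doesn’t account for.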

Noise

As alluded to earlier, servers can be noisy and you can often find servers referred to as “Jet Engines”. This is due to a number of factors, from the use of passive CPU coolers (which rely on fast chassis fans for airflow) to the size of the system. If noise is a concern, then consumer grade hardware might be a better choice and, if not, a tower server is usually quieter than a rack mount. The worst are usually 1U rack mount servers due to having smaller (and thus noisier) fans and limitations on space in the chassis.

Architecture

When building out a homelab, a popular starting choice is to use something like a single board computer (SBC), such as a Raspberry Pi, as a cheap way to get started. However, one of the pitfalls of this approach is that a lot of SBCs run ARM as opposed to the more popular x86 architecture, and applications need to be compiled for each of these, meaning that some software just won’t work on ARM. To be clear, I do really like SBCs and there’s a lot of software which does support ARM natively, but it’s worth checking if the applications you want to run work on the architecture you’re running. If the software doesn’t work on the architecture you have, then there are workarounds, such as using an emulator like QEMU, but this can impact performance.
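If you’re not sure which architecture a machine is running, a quick check is possible from Python’s standard library. This is a minimal sketch: `platform.machine()` is a real standard library call, but the mapping table is my own and only covers the spellings I’d expect to see on common systems, so treat anything it reports as “unknown” as something to look up rather than as an error.

```python
import platform

# Common values reported by platform.machine(); this list is an assumption
# and is not exhaustive.
ARCH_FAMILIES = {
    "x86_64": "x86-64",
    "amd64": "x86-64",
    "aarch64": "ARM64",
    "arm64": "ARM64",
    "armv7l": "ARM (32-bit)",
    "armv6l": "ARM (32-bit)",
}


def arch_family(machine: str) -> str:
    """Map a raw machine string to a broad architecture family."""
    return ARCH_FAMILIES.get(machine.lower(), f"unknown ({machine})")


if __name__ == "__main__":
    raw = platform.machine()
    print(f"This machine reports '{raw}', which is {arch_family(raw)}")
```

You can then compare the result against the architectures listed on the download or container image page of the software you want to run.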

Workstations

When browsing for hardware, you can come across workstations that use the same processors as their server counterparts, while being cheaper than the equivalent server. This was an option I did consider heavily, as I’d be able to get a better server for less money. However, there are a few caveats to this approach. Typically, workstation chassis support a maximum of 4 3.5” hard drives, and usually only 2, meaning you can be limited on total hard drives without using expansion cards. Additionally, most workstations lack an integrated GPU (iGPU) on the CPU, meaning a graphics card needs to be purchased for the system, and running a virtual machine that requires a GPU is much more complicated when there’s only 1 GPU in the system.

Intel vs AMD in enterprise hardware

Realistically, both Intel and AMD can be used to build out a server successfully, but within the server market Intel is far more popular, as shown by CPU benchmark. Additionally, from what I could see, Intel drivers for Linux are considered more mature than AMD’s, with features such as Quick Sync, although AMD does have equivalents.

Transcoding

This is going to be discussed in more detail below, but transcoding is the process of decoding a file and then encoding it again in a different format, and it’s usually referenced in regards to video conversion. This is useful for a few things, but it’s mainly used in a homelab for the following:

- encoding files into a more efficient (and smaller) format

- most devices only support playback in specific formats, so transcoding can be used to convert videos in real time if the source file isn’t in one of them

Transcoding can be done on the CPU (software transcoding) or on a GPU (hardware transcoding), and is one of the main reasons to have a GPU in a home lab.

It should be noted there are workarounds to not do transcoding, by either disabling it, or using something like direct play.

Power draw

Using a power calculator, a server that uses 150W on average will cost approximately £380 a year, based on the January energy price cap in the UK. As such, counterintuitively, a more expensive but more efficient system can end up costing a lot less over time. This is also one of the reasons you see consolidation upgrades where somebody goes from lots of big servers to a single smaller server. Additionally, this is one of the reasons not to pick older computers, as the combination of lower efficiency and higher power draw makes using these systems less viable.
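The arithmetic behind a power calculator is simple enough to sketch. The snippet below assumes the server runs 24/7 and uses a unit rate of £0.29 per kWh, which is my own back-calculation from the £380 figure above and not an official tariff; it also ignores standing charges.

```python
HOURS_PER_YEAR = 24 * 365

# Assumed unit rate in GBP per kWh, back-solved from the figure in the text.
ASSUMED_PRICE_PER_KWH = 0.29


def annual_cost(avg_watts: float, price_per_kwh: float) -> float:
    """Return the yearly electricity cost of a device running 24/7."""
    kwh_per_year = avg_watts * HOURS_PER_YEAR / 1000
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    for watts in (50, 150, 300):
        cost = annual_cost(watts, ASSUMED_PRICE_PER_KWH)
        print(f"{watts}W average draw costs about £{cost:.0f} per year")
```

Because the cost scales linearly with wattage, a system averaging 50W costs a third as much to run as one averaging 150W, which can pay back a higher purchase price over a few years.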

CPU choices

While the CPU has been discussed a fair amount in the previous sections due to the way we refer to the hardware, there are several things I kept in mind specifically for CPU choice which weren’t affected by the brand.

Cores

Core count was one of the things I prioritized when searching for a CPU, as more cores mean you can run more virtual machines, since cores have to be allocated to each machine. However, with the rise of multi-threaded workloads, most CPUs you can get today have lots of cores. Additionally, most mainstream CPUs use simultaneous multithreading (SMT) to “double” the number of cores available for use in a system. For example, I used an i7 6700K in testing, which is a 4 core processor that allowed me to use 8 threads for various applications. Though it does need to be said, these additional threads are not the same as physical cores, which will always be better than relying on SMT. You can tell the processor has this from the description of the CPU, where it will say something like 4 cores (8 threads).
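To get a feel for how core count limits virtual machines, here’s a small sketch that estimates how many VMs fit on a machine. It assumes each VM gets dedicated threads with no overcommitting, which is a simplification (hypervisors generally let you allocate more virtual CPUs than you physically have), and the `max_vms` helper is my own illustration. `os.cpu_count()` reports logical CPUs, so threads added by SMT are included in the count.

```python
import os


def max_vms(logical_cpus: int, vcpus_per_vm: int, reserved_for_host: int = 0) -> int:
    """Estimate how many VMs fit if every vCPU maps to its own logical CPU."""
    if vcpus_per_vm <= 0:
        raise ValueError("vcpus_per_vm must be positive")
    available = max(logical_cpus - reserved_for_host, 0)
    return available // vcpus_per_vm


if __name__ == "__main__":
    logical = os.cpu_count() or 1  # cpu_count() can return None
    print(f"Logical CPUs on this machine: {logical}")
    print("2-vCPU VMs with 2 threads kept for the host:",
          max_vms(logical, vcpus_per_vm=2, reserved_for_host=2))
```

On a 4 core (8 thread) chip like the one I tested with, that works out to 3 VMs of 2 vCPUs each while keeping 2 threads back for the host.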

CPU overall performance

Figuring out the performance of a standard consumer chip was pretty straightforward for me, as I had a pretty good understanding of the differences between various desktop chips, such as the difference between Ryzen 7 and 9 or Intel i7 and i9, where, while the model numbers change a bit, overall, bigger numbers = newer/faster. This isn’t quite the case in the enterprise market. For example, an AMD EPYC 7551 is from 2017, whereas the AMD EPYC 7432 is from 2021. These CPU models follow a convention that makes sense, but for the layman looking at these components for the first time, it can be a little confusing. Therefore, when I found a model I didn’t understand, my best bet was to google the model number and look at the CPU benchmark link. Additionally, Wikipedia is pretty good for getting full lists of releases for specific processors.

Software Transcoding

From what I can tell, a CPU will require a PassMark score of between 12000 and 17000 to transcode 4K video in software, which is very achievable with most modern CPUs today. Keep in mind though, that using a CPU to transcode will never be as good as using a GPU, and can have significant slowdowns even when exceeding the requirements.

Single core performance

Single core performance is definitely important, but it’s not something I heavily considered when looking at what CPU to use. I mainly used single core performance to compare chips of similar core counts, i.e. I’d be more likely to choose a much slower 32 core processor over a very fast 4 core processor.

Hard drives

Hard drives are a really important part of choosing a homelab, but more in terms of price, and price per terabyte in particular, than compatibility. When I was looking for hard drives, I used Disk Prices to work out what had the best price per terabyte, then compared those drives on PriceRunner to see if I could get a better deal. I ended up with a pair of 16TB Seagate EXOS drives as my primary storage, and a pair of 1TB SSDs as boot drives.
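The price per terabyte comparison is easy to do yourself once you’ve collected a few listings. In the sketch below the drive names and prices are made up purely for illustration, and the helper names are my own.

```python
def price_per_tb(price: float, capacity_tb: float) -> float:
    """Return the cost of one terabyte for a given drive."""
    return price / capacity_tb


def rank_by_value(drives):
    """Sort (name, price, capacity_tb) tuples from cheapest to dearest per TB."""
    return sorted(drives, key=lambda d: price_per_tb(d[1], d[2]))


if __name__ == "__main__":
    # Hypothetical listings: (name, price in GBP, capacity in TB).
    listings = [
        ("Drive C 4TB", 80.0, 4),
        ("Drive A 16TB", 240.0, 16),
        ("Drive B 8TB", 140.0, 8),
    ]
    for name, price, capacity in rank_by_value(listings):
        print(f"{name}: £{price_per_tb(price, capacity):.2f} per TB")
```

Larger drives often win on price per terabyte, but the total outlay is higher, so it’s worth weighing this against the budget question from earlier.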

RAID

Briefly, RAID is a way of providing redundancy to data drives by duplicating data, so that if you get drive failures you don’t lose data.

Honestly, hardware RAID has fallen heavily out of favour in a home lab as very competent software solutions exist to perform RAID, so I’ll be discussing my RAID solutions in a later article around software.

Used/recertified hard drives

Large capacity drives can be extremely expensive. A way to get around this is to pick up a hard drive that’s been used and then sold on, possibly with some maintenance performed. Alternatively, you can usually pick up some hard drives attached to a system when buying second hand off eBay. Thoughts on whether this is a good thing to do are mixed, but they generally say that if you do, you should keep backups of the data just in case. For myself, I had enough money set aside to start off with, so I bought new drives, but I can definitely see the utility of keeping used drives in my system.

Another way to get hold of cheaper drives is to look for “recertified” or “white label” drives. There are some differences between the two, but that’s outside the scope of this article.

For me, this is ultimately about managing risks, and what works in a production environment (where none of these options would be accepted) isn’t necessarily what I want in my home, i.e. the max amount of reasonably safe storage for the lowest price. Even new drives have a failure rate, so it’s worth being careful either way.

SAS vs SATA

SAS and SATA are the 2 main storage interfaces you will find, and there’s an in-depth article on the differences here. Essentially, both are used for hard disk drives (HDD): SAS drives are built for enterprise and run faster, with more monitoring utilities, whereas SATA is the standard consumer interface for spinning platters. In general, you need to check what the interface on the controller is, as this should tell you whether it accepts SAS or SATA. Usually, a SAS controller will work with SATA drives, but a SATA controller won’t work with SAS drives due to the voltage range. It also needs to be said that SAS drives are more expensive for the same storage.

There is an additional interface known as fibre channel (FC), but I wasn’t looking to use these as they’re quite expensive, require separate interfaces to support and aren’t used on newer servers, so I’ve excluded them.

Hard drive form factor

Outside of things such as M.2 or NVMe, hard drives have consolidated around 2.5” and 3.5” sizes, which I found frequently referred to as small form factor (SFF) and large form factor (LFF). While in the consumer market we generally see HDDs as LFF and SSDs as SFF, SAS drives are commonly found in SFF, which is why you can find a lot of servers offering SFF hard disk cages built into the chassis, and it’s something which has caught people out before.

SMART monitoring

SAS drives do have better monitoring available, but it’s still possible to check the health of SATA drives. One of the standard tools for doing this is SMART, which can give you a simple pass/fail check on the state of your hard drives.
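On Linux, the pass/fail check is usually read with `smartctl -H` from the smartmontools package. The sketch below wraps that call and parses the result. It assumes smartmontools is installed, that the script has enough privileges to query the drive, and that `/dev/sda` is the drive you care about; the two output lines it looks for are the ones I’d expect for SATA and SAS drives respectively, so other devices may report differently.

```python
import subprocess


def parse_health(smartctl_output: str):
    """Return True for a pass, False for a fail, None if no verdict was found."""
    for line in smartctl_output.splitlines():
        # Typical line for ATA/SATA drives.
        if "overall-health self-assessment test result" in line:
            return line.split(":", 1)[1].strip() == "PASSED"
        # Typical line for SAS/SCSI drives.
        if "SMART Health Status" in line:
            return line.split(":", 1)[1].strip() == "OK"
    return None


def check_drive(device: str):
    """Run `smartctl -H` on a device and return its parsed health verdict."""
    result = subprocess.run(
        ["smartctl", "-H", device],
        capture_output=True,
        text=True,
        check=False,  # smartctl uses non-zero exit codes to flag problems
    )
    return parse_health(result.stdout)


if __name__ == "__main__":
    # Assumed device path; change it to match your system.
    print("Healthy:", check_drive("/dev/sda"))
```

A pass here only means the drive hasn’t flagged itself as failing, so it’s a useful early warning rather than a guarantee.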

Shingled magnetic recording

Shingled magnetic recording (SMR) and conventional magnetic recording (CMR) are 2 of the major technologies used in how data is written to a spinning hard disk. The exact differences between them can be seen here, but for our purposes, SMR is cheaper to manufacture, but is designed for archival use and doesn’t perform well in RAID setups due to how SMR has to reorganize data. As I am planning to run a RAID setup, I avoided SMR drives.

A few years ago, it was found that certain manufacturers were selling SMR drives for NAS use without disclosing what they were. Fortunately, this scandal did result in all drives being labelled, but it can be easy to miss. Additionally, tables like this from Seagate are helpful.

Vibration

Spinning hard drives are sensitive to vibration. How damaging this is, is up for discussion, but it’s likely that lots of vibration reduces performance and shortens the lifespan of the disk. As such, it’s recommended to avoid placing drives somewhere they can get a lot of vibration. Conversely, solid state drives are not nearly as susceptible to vibration. For me, this generally means don’t stick a bunch of loose hard drives in the bottom of the case.

RAM

Error correction code

Error correction code (ECC) RAM has a more in-depth discussion here, but it essentially has the ability to detect and correct memory errors in the RAM, reducing the occurrence of crashes and, in more extreme cases, data corruption. This is primarily used in the enterprise space, and both the CPU and the motherboard have to specifically support ECC RAM in order to use it. There are a few consumer CPUs/motherboards that support ECC, but most don’t.

This is one of the most contentious arguments in the homelab space, with opinions ranging from absolutely critical to basically snake oil. However, most opinions I saw were that it’s nice to have, but not critical to operations. This calculus does change in production environments though.

On-die ECC

This is part of the DDR5 RAM spec, but for most intents, doesn’t provide the protection that ECC is meant to provide.

Motherboard

The motherboard wasn’t something I looked into heavily, outside of noting the number of hard drive ports on the motherboard and that a server manufacturer’s motherboard will likely come with a fair number of proprietary connections. If I was looking at a consumer board, then 4 ports is common, which is less than I’d want in the long term. However, this can be coupled with NVMe drives on more modern motherboards and it’s also possible to buy expansion cards, which will be discussed later.

PCIe

PCIe is pretty important in a server due to the large number of add-on cards that can be used for a server, which aren’t found often in computers built for consumers. More explanation on what these are can be found here

Lanes

PCIe lanes are counted as a combination of lanes provided by the CPU and the motherboard chipset, with those coming from the CPU considered to be faster than those from the chipset, due to the direct connection. Typically, consumer hardware has a much lower number of PCIe lanes than enterprise hardware. For example, most latest generation consumer CPUs provide 24 lanes and the motherboard adds 0 lanes. This does change year on year though; for example, when SLI was popular, motherboards came with a lot more PCIe lanes. A common card to put into a PCIe slot is a graphics card, which usually comes in an x16 variant of PCIe, meaning it takes up 16 lanes.

Another thing to mention, is that you can go down PCIe lanes but not up, i.e.: an x8 card can sit in an x16 slot, but not vice versa.

As mentioned above, there tend to be a lot more lanes available on enterprise hardware due to the need for additional cards in a server, some of the more common of which are discussed below. Additionally, in the consumer space you can often find that the motherboard has multiple x16 slots, but they’re wired to use x4 or x8, or even switch off entirely based on the usage of other slots. However, an x8 (or even x16) card can still be plugged into such a slot; it just means the card will be limited to the bandwidth of the slot.
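A quick way to see why lane counts matter is to add up what each card wants. The sketch below uses figures from this article (24 CPU lanes, an x16 graphics card, an x8 HBA and an x4 NVMe drive); the function name is my own, and real boards complicate this with chipset lanes and slots that share or split lanes.

```python
def lanes_remaining(cpu_lanes: int, cards: dict) -> int:
    """Return the lanes left after every card gets its full width (negative = short)."""
    return cpu_lanes - sum(cards.values())


if __name__ == "__main__":
    build = {"graphics card": 16, "HBA": 8}
    print("Lanes left:", lanes_remaining(24, build))

    # Adding an NVMe drive pushes the build over the 24 lane budget.
    build["NVMe drive"] = 4
    print("Lanes left with NVMe:", lanes_remaining(24, build))
```

A negative result doesn’t necessarily mean the build won’t work, as cards can run at reduced widths, but it does mean something will be giving up bandwidth.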

Version

The version of the PCIe lane can be important because, as the generation increases, the bandwidth doubles. For example, a single lane (x1) in PCIe v1 supports 250MBps, whereas v2 supports 500MBps. This is explained in more depth here. It’s also worth mentioning that any generation of PCIe card is designed to work with any generation of PCIe slot, but the maximum transfer speed will be at the lower of the two.

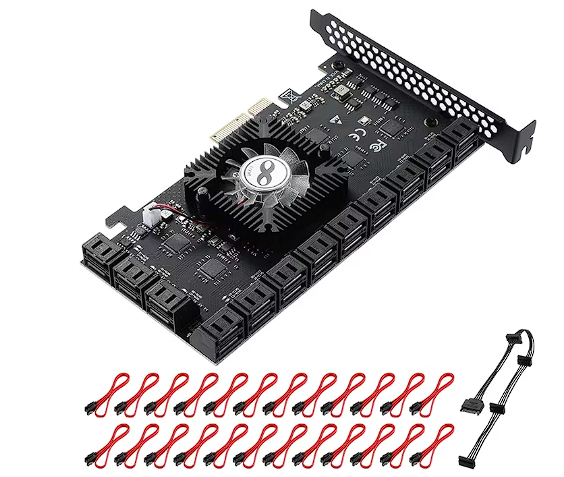
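The doubling rule and the “lower of the two” rule can be combined into a rough bandwidth estimate. This sketch takes the 250MBps per lane figure for v1 and doubles it per generation, which is an approximation (real per-lane figures for later generations differ slightly because of encoding overheads); the function names are my own.

```python
GEN1_MB_PER_LANE = 250  # approximate per-lane bandwidth of PCIe v1 in MB/s


def lane_bandwidth_mb(generation: int) -> int:
    """Approximate per-lane bandwidth, doubling with each PCIe generation."""
    return GEN1_MB_PER_LANE * 2 ** (generation - 1)


def link_bandwidth_mb(card_gen: int, card_lanes: int, slot_gen: int, slot_lanes: int) -> int:
    """Estimate link bandwidth: the link runs at the lower generation and width."""
    generation = min(card_gen, slot_gen)
    lanes = min(card_lanes, slot_lanes)  # slot_lanes means electrically wired lanes
    return lane_bandwidth_mb(generation) * lanes


if __name__ == "__main__":
    # A v3 x8 card in a v2 slot that is only wired for x4.
    print(link_bandwidth_mb(card_gen=3, card_lanes=8, slot_gen=2, slot_lanes=4), "MB/s")
```

This is handy for checking whether an older server’s slots will bottleneck a newer card before you buy it.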

Host bus adapters

Host bus adapters (HBAs) are how you see so many hard drives attached to a single system. Essentially, they provide a number of additional ports for hard drives. These are most often seen providing between 4 and 16 lanes at speeds of 6 to 12Gbps, depending on the model and age, and will typically take up an x8 slot. HBA cards are also one of the ways to add SAS drives to a SATA motherboard, as the controller is on the HBA card itself.

Huge drive numbers

Occasionally, you will come across HBA cards that show huge numbers of drives, like this v4 card that can support 1024 drives. Obviously, it doesn’t have space for 1024 connectors; the number comes from multiple drives sharing the same SAS lane on the HBA. However, to actually take advantage of that many drives, more hardware is needed, like a disk shelf. Disk shelves are also called JBODs, which stands for Just a Bunch Of Disks.

IT mode

Most HBA cards are sold by Broadcom/LSI (or are LSI cards, but branded by the manufacturer) and by default, they run in a mode called Integrated RAID (IR). As it says on the tin, this allows the HBA to function in a RAID configuration. At best, this can slow your HBA down, and at worst, present all drives to the system as a single block of storage, which isn’t useful if you’re planning to do software RAID anyway. As such, it’s considered a better choice to buy a card that’s been flashed to initiator target (IT) mode, where the firmware presents all drives to the system with as little in-between as possible. This does mean cards that have been pre-flashed to IT mode command a slight price premium, but it is possible to flash the HBA yourself if you want.

Connection form factor

It’s much less common to find a (reputable) HBA which has direct SATA connections on the board itself (though I did find a few on Amazon) and far more likely to find something with a connection like mini-SAS, which itself comes in many versions. Ultimately, the listing should say what the form factor is, or alternatively searching for the card on Google will tell you what the connection is. From there it’s pretty easy to find cables that will connect to at least 4 SATA drives, such as SFF-8088 to SATA.

24 ports of SATA on an x4:

Network interface cards

Usually referred to as a NIC, these are a way of adding more ports, or faster network connections, to the computer. I didn’t need one, so I didn’t spend much time looking at them, other than to note that NICs generally come with standard RJ-45 ports, or with some form of SFP port.

GPU

Within a server, a GPU is used for several purposes, but for my use case I was most interested in either the hardware acceleration or, if I bought a server without integrated graphics, using it for initial setup. There’s a lot of information about what GPU to use for hardware transcoding. For example, if you’re interested in doing transcoding in the AV1 codec, you’ll need either an Intel Arc GPU, or the latest AMD or Nvidia graphics cards. The same goes for H.265 or H.264, just with respectively earlier generations.

Another thing to take into account is the number of streams that a GPU can run concurrently, which is useful for streaming footage around your house. Fortunately, I did find this webpage for figuring out how many concurrent streams a specific Nvidia GPU can support.

Finally, it needs to be noted that the setup of a GPU in Linux can be a far more involved process than Windows and support can be limited.

NVMe

NVMe drives are faster than SATA and as a consequence make a good choice for a boot drive. However, each NVMe drive does take up 4 PCIe lanes (x4). My plan is to use these for boot drives, if they are available on my system, but it does appear that the most common second-hand servers I can find don’t have support for NVMe drives directly. Alternatively, I can buy a carrier card that can add NVMe drives to a server that doesn’t typically have them.


Other

There’s a huge range of additional uses for a PCIe lane, such as TV tuners, sound cards and capture cards. All of these are useful in certain setups, but in the interest of keeping this already long article somewhat reasonable, I’m just lumping them all together here.

Where to find a server

Buying new

While researching, I felt this was the “simplest” route as you can just pick out the exact parts you want, as opposed to fitting what’s available in the second-hand market into what you want. On the other hand, new gear can carry a significant price premium.

For consumer hardware, I do usually build my computers, so I take advantage of PCPartPicker to map out whatever I’m looking to build. If looking for server hardware, most retailers (such as Ebuyer) will sell it, or alternatively you can go directly to a manufacturer like HPE (albeit via email sales).

Buying used

As discussed, buying second hand can be a great way to get an excellent deal on some interesting parts, but can also be a little harder to find exactly what you want, and there can be issues around those parts from being heavily outdated, to weird “quirks” (i.e.: there’s probably a reason it’s so cheap).

There are a few avenues for searching for used hardware online. One of the most common places, however, is eBay. eBay can be pretty hard to search for specific parts, so there are sites like LabGopher that can be used to help filter out junk and find decent deals.

Alternatively, there are specialist retailers, such as Bargain Hardware in the United Kingdom. I’ve found that these retailers will also sell parts on eBay, but the direct website can offer better customization.

Final Thoughts

There are so many options to look at that it can be extremely confusing to know what will work with various configurations. While it might be spoilers for my next article, I did end up going with consumer hardware, because I was able to get newer (and thus more efficient) parts than the equivalent I could find used, as well as some pretty great discounts while I was looking.